Key Highlights

- Google launched the efficient Gemini 1.5 Flash model

- Android 15 will feature advanced AI tools, including scam alerts and homework help

- Google’s search now offers AI-generated summaries

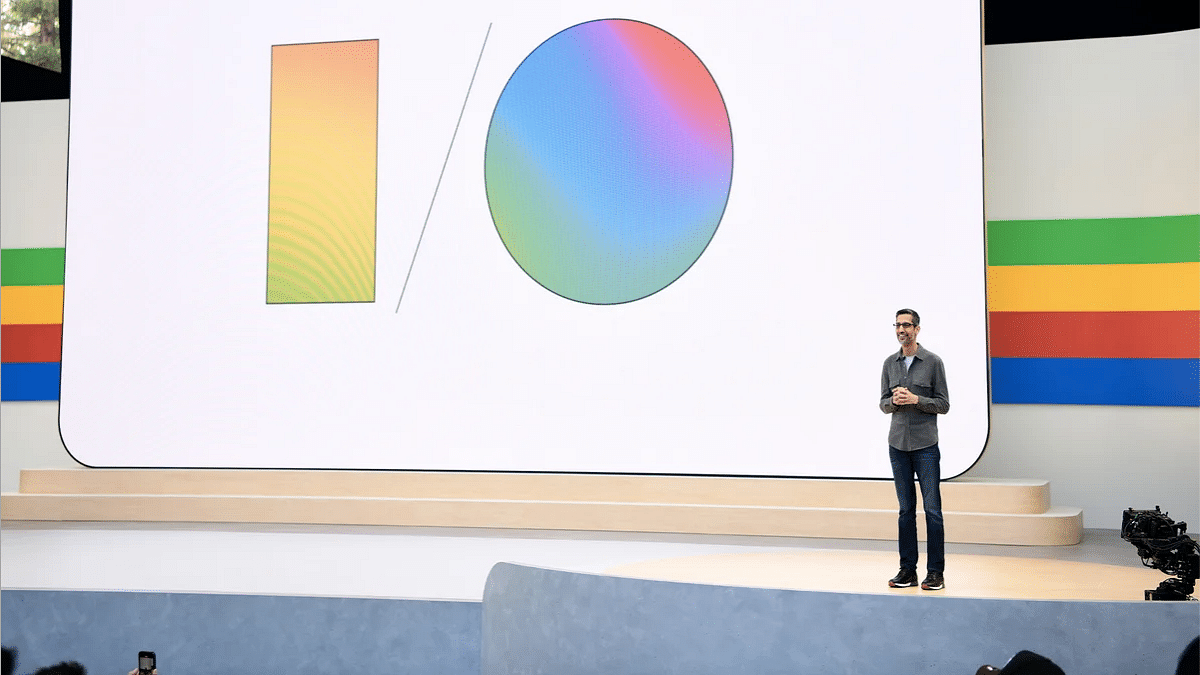

Google’s annual developers conference, Google I/O 2024, unveiled numerous groundbreaking innovations, emphasizing the integration of AI across its platforms. Here’s a rundown of the top announcements.

Gemini 1.5 and Gemma Models

Google introduced the Gemini 1.5 Flash language model, which is designed to be lightweight, fast, and efficient. With a context window of one million tokens, it excels in handling high-frequency tasks that require quick responses.

Additionally, Google unveiled Gemma 2, the next generation of its Gemma open models, which will include a 27-billion-parameter version optimized for TPUs and GPUs and is set to launch in June. A new vision-language model, PaliGemma, inspired by PaLI-3, also joins the Gemma family.

AI-Powered Google Photos and Search

The new “Ask Photos” feature in Google Photos leverages Gemini’s multimodal capabilities, allowing users to locate specific images with simple prompts. For example, asking for pictures from a particular event will instantly bring up relevant photos from the user’s gallery.

Google is also enhancing its search engine with AI-generated summaries and overviews for search topics. This new feature, initially tested in the Google Search Generative Experience (SGE), will now be available for dining and recipe searches, with plans to expand to other categories.

AI Features in Android

AI is deeply integrated into the upcoming Android 15 update. Features include Circle to Search, which helps students solve math and physics problems step-by-step. Gemini Nano, a lightweight on-device AI model, will now understand multimodal inputs like sights and sounds, in addition to text. It will also provide real-time scam alerts during calls by detecting suspicious conversation patterns.

Furthermore, Gemini will overlay on top of different apps, allowing users to interact with the AI assistant while using other applications. For instance, users can ask questions about a YouTube video or drag AI-generated images directly into Gmail.

Firebase Genkit

The new Firebase Genkit aims to simplify the development of AI-powered applications in JavaScript/TypeScript, with Go support to follow. This open-source framework will assist in content generation, summarization, text translation, and image creation.

LearnLM

Google introduced LearnLM, a family of generative AI models fine-tuned for educational purposes. Developed in collaboration with Google DeepMind, LearnLM will provide conversational tutoring across various subjects.

Project Astra

Project Astra, Google’s new AI agent, is poised to compete with OpenAI’s GPT-4o. Demonstrated capabilities include identifying objects, explaining code, and even locating personal items like glasses. Project Astra hints at future enhancements to Google Lens, potentially integrating with devices such as smartphones and smart glasses.

Veo

Google also revealed Veo, a text-to-video generation model capable of creating 1080p videos in diverse cinematic styles. Users will have more control over video creation, using prompt terms like “timelapse” or “aerial shots.”

Changes to Google Play

Google Play will see new discovery features, updated ways to acquire users, and enhancements to the Play Points program. Updates to the Play SDK Console and Play Integrity API are also on the horizon, improving the overall app ecosystem.

With these innovations, Google continues to push the boundaries of AI, making technology more helpful and accessible to everyone.